Grape Escape Showcases Apparancy and SYSPRO

RFG Perspective: Cost efficiencies, elimination of redundancy, and delivery of timely, accurate information to users anywhere, anytime and on any device remain top priorities across the business landscape. In the manufacturing and distribution sectors, U.S. business executives in small and medium-sized businesses (SMBs) have struggled like Sisyphus and the boulder to maintain their organizations; many of those organizations have been snuffed out entirely. A new survey showing that manufacturing in the US is on the rise should spur cautious optimism among business executives. However, now more than ever these businesses need business process management (BPM) and/or enterprise resource planning (ERP) solutions to remove cost and redundancy and deliver timely information to executives and their staff wherever, whenever and on whatever device. In the healthcare sector the passage of the Affordable Care Act has been met with both criticism and praise. Its future is uncertain. What is certain, however, is that the Veterans Administration scandal has focused the lens on a persistent, growing problem: Veterans have to file a morass of forms to claim benefits they both need and deserve. The implementation of innovative technologies aimed at untangling and simplifying Veterans' benefits claims and scheduling processes, as well as a cultural change that supports the technology, would be a giant leap forward for these praiseworthy and selfless individuals.

The JRocket Marketing Grape Escape ® 2014 provided industry analysts with a rare insider’s peek at two of today's innovative, nimble, and multi-faceted technology vendors. The three-day event was a tour de force that showcased Apparancy and SYSPRO, two disruptive leading-edge companies that are reshaping their industry sectors.

Apparancy

Apparancy is delivering on its Know. Do. Prove. value proposition with an automated business process platform that initially aims at helping healthcare organizations connect multiple existing systems and data sources to achieve specific goals. At this year's event, Karen Watts, CEO of Apparancy, expounded on how Apparancy can help these organizations identify disjointed workflows, and eliminate redundancies and data overlap, by combining data and processes into single-purpose role-based views that eliminate the need to rip and replace.

The big news was Ms. Watts' announcement, appropriately on Memorial Day, that Apparancy had acquired usage rights to an earlier software product, "TurboVet," which had already catalogued more than five thousand Veterans Administration forms. Apparancy has begun work on updating and integrating these forms into its platform, with the end goal of launching VetApprove in Q4 2014. If necessity is the mother of invention, then Apparancy, powered by Corefino, is filling a market vacuum with its VetApprove Veterans benefit product. VetApprove will revolutionize the way Veterans will be able to access their entitled benefits.

VetApprove will enable 22 million Veterans to apply for entitled healthcare, employment, education, state compensation, and disability benefits, as well as for other entitlement programs such as funeral benefits extended to spouses of veterans. This service will be offered to veterans free of charge. Additionally, it can become the underlying workflow management platform that would enable the VA to efficiently process applications, schedule services, and monitor and manage its operations, which are still antediluvian and lack accurate measurement metrics.

SYSPRO

Joey Benadretti, President of SYSPRO USA, announced a turnabout in US manufacturing trends. Citing MAPI survey findings, Mr. Benadretti pointed to a potential upswing in the future of US manufacturing. The study, which covered the period from 2006 to 2012, showed 19 states experiencing double-digit growth above the national average, with the majority of those in the western states. He also pointed out that US manufacturing is moving from the Mexican border states (except Texas) to states that are closer to the Canadian border. Output in two sectors is also accelerating, and to address these trends SYSPRO is expanding into the automotive and energy manufacturing sub-industries.

On the product side, the company's new SYSPRO Espresso provides an enterprise mobile ecosystem that can be tailored to satisfy front-end and back-end requirements. Features of the highly anticipated SYSPRO Espresso will include new drag-and-drop technology and mass customization for any device. This ground-breaking technology supports single sign-on, is device-agnostic, and allows users to access multiple applications on multiple devices using a roaming profile so they can switch from one device to another and instantly connect. This creates an advantage for both the customer and SYSPRO, as the millennial generation will want to access ERP on their mobile devices. Because device screen real estate is limited, these tech-savvy users will also want to customize which apps they see on their mobile phones, tablets, etc.

Not surprisingly, SYSPRO currently has one of the highest customer retention rates in the industry. Mr. Benadretti confidently remarked that his company will remain on the cutting edge of technology providing customers with product flexibility and low-cost solutions.

RFG POV: Apparancy and SYSPRO unveiled substantive, cutting edge, and innovatively disruptive technology solutions. Apparancy’s targeted focus will enable the beleaguered VA to begin to meet the urgent needs of its Veterans, while SYSPRO is enabling manufacturing executives to meet customer demands as the industry undergoes an uptick in growth and a geographic shift. Business, government and IT executives should proactively harness spot-on technology solutions to solve exigent business problems, respond expeditiously to clients, and manage change well into the future so that their organizations continue to satisfy customers and remain relevant as markets evolve.

Additional relevant research and consulting services are available. Interested readers should contact Client Services to arrange further discussion or an interview with Ms. Maria DeGiglio, MA, Principal Analyst.

Cybersecurity and the Cloud Multiplier Effect

RFG Perspective: While corporate boards grapple with cybersecurity issues and attempt to shore up their defenses, the inclusion of cloud computing models in the equation is increasing the risk exposure levels. Business and IT executives should work together to aggressively establish processes, procedures, and technology that will minimize the risk exposures to levels deemed acceptable. Additionally, senior executives and Boards of Directors need to play a more active role in the accountability and governance of cybersecurity by discussing and addressing challenges, issues and status at least quarterly.

An article on the front page of the Wall Street Journal on June 30, 2014 discussed corporate boards racing to shore up cybersecurity. It alluded to a number of corporate boards waking up to cyber threats and worrying that hackers would steal company know-how and intellectual property (IP). In the first half of 2014 1,517 NYSE- or NASDAQ-traded companies listed in their securities filings references to some form of cyber attack or data breach – almost a 20 percent increase from the previous year. In all of 2013 1,288 such filing comments were made whereas in 2012 only 879 companies reported cyber statements. This is good and bad news – good that cybersecurity is getting CEO and Board attention and bad news in that executives are belatedly waking up to an endemic problem.
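As a quick check of the arithmetic above, the following minimal Python sketch computes the growth rates implied by the three filing counts quoted from the article. It assumes the "almost 20 percent" figure compares the 1,517 first-half 2014 filings with the 1,288 filings for all of 2013; the function name `pct_change` is illustrative and does not come from the article.

```python
def pct_change(old: int, new: int) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100.0


# Filing counts quoted in the text above.
filings = {"2012": 879, "2013": 1288, "2014-H1": 1517}

growth_2013 = pct_change(filings["2012"], filings["2013"])
# Assumption: the first half of 2014 is compared with all of 2013.
growth_2014_h1 = pct_change(filings["2013"], filings["2014-H1"])

print(f"2012 -> 2013:    {growth_2013:.1f}%")
print(f"2013 -> 2014 H1: {growth_2014_h1:.1f}%")
```

Under that assumption the 2014 increase works out to a little under 18 percent, consistent with "almost 20 percent," while the jump from 2012 to 2013 was considerably larger.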

Fiduciary Responsibility

The Board and CEO have a fiduciary responsibility to shareholders to protect the company's assets from undue risks. It is not something that can be assigned and then ignored. Yet that is what has happened at many companies over the years. Boards and CEOs must be involved in cybersecurity governance and decision-making on an ongoing basis and not shunt it off to Chief Risk Officers (CROs), Chief Security Officers (CSOs or CISOs) and/or IT executives. CEOs and other senior executives should also ensure privacy and security programs are aligned with each business unit's requirements and that the risk probability and exposures are reasonably known and reduced to an acceptable level. It is important that all parties understand that zero security risk is no longer possible (nor would the expense be worth it if attainable); what is important is to agree upon what level of risk exposure is acceptable, budget for it, and implement initiatives to make it happen.

At the Board level there should be a risk committee that is responsible for all risk management, including cyber risk. Moreover, best practices suggest Boards should, at a minimum, do the following five things:

- regularly review and approve top-level policies on privacy and IT security risks

- regularly review and approve roles and responsibilities of lead personnel responsible for privacy and IT security

- regularly review and approve annual budgets for privacy and IT security programs separate from IT budgets

- regularly review and approve cyber insurance coverage

- regularly receive and act upon reports from senior management regarding privacy and IT security risk exposures.

These efforts can be done by the full Board or by a risk committee that reports to the Board. Some Boards may have assigned this role to the audit committee but, while it is good that it is addressed, it is not a perfect fit.

Cloud Multiplier Effect

In June the Ponemon Institute LLC published a report on the cloud multiplier effect. The firm surveyed 613 IT and IT security practitioners in the U.S. who are familiar with their companies' usage of cloud services. The news is not good. Because most respondents believe cloud security is an oxymoron and certain cloud services can result in greater exposures and more costly breaches, the use of cloud services multiplies the breach costs by a factor between 1.38 and 2.25. The top two impacts are from cloud breaches involving high-value IP and the backup and storage of sensitive or confidential information, respectively. Most respondents believe corporate IT organizations are not properly vetting cloud platforms for security, are not proactively assessing information to ensure sensitive or confidential information is not in the cloud, and are not vigilant on cloud audits or assessments.
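To make the multiplier concrete, the short Python sketch below applies the quoted range of 1.38 to 2.25 to a baseline breach cost. The baseline figure and the function name `cloud_adjusted_cost` are hypothetical and chosen only for illustration; they do not come from the Ponemon report.

```python
# Multiplier range quoted in the text above.
MULTIPLIER_LOW = 1.38
MULTIPLIER_HIGH = 2.25


def cloud_adjusted_cost(baseline_cost: float) -> tuple:
    """Return the (low, high) breach cost after applying the multiplier range."""
    return baseline_cost * MULTIPLIER_LOW, baseline_cost * MULTIPLIER_HIGH


# Hypothetical baseline breach cost in dollars, for illustration only.
baseline = 2_000_000.0
low, high = cloud_adjusted_cost(baseline)

print(f"Baseline breach cost:       ${baseline:,.0f}")
print(f"Cloud-adjusted breach cost: ${low:,.0f} to ${high:,.0f}")
```

A risk committee could run the same calculation against its own breach cost estimates when deciding how much exposure from cloud services is acceptable.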

Moreover, disturbingly, almost 75 percent of respondents believe their cloud services providers would not notify them immediately if they had a data breach involving the loss or theft of IP or business confidential information. Almost two-thirds of those surveyed expressed concern that their cloud service providers are not in full compliance with privacy and data protection laws – and this is in the U.S., where the rules are less strict than in the EU. Furthermore, respondents feel there is a lack of visibility into the cloud as it relates to applications, data, devices, and usage.

Summary

Boards, CEOs and senior non-IT management need to become more aware of their cybersecurity exposures and actively participate in minimizing the risks. IT executives, on the other hand, need to present the challenges, status and trends in a more business-oriented, less technical manner, including recommendations, so that the other executives can appreciate the issues and authorize the appropriate actions. As the Ponemon study shows, the challenges go beyond the corporate four walls into clouds the company has no control over. IT executives need to become involved in the selection and vetting of cloud services providers. Furthermore, business and IT executives must work together and build strong governance practices to minimize cybersecurity risks.

RFG POV: Cybersecurity risk exposures are increasing and collectively executives are falling short in their fiduciary responsibilities to protect company assets. Boards, CEOs and other senior executives must take their accountability seriously and play a more aggressive role in ensuring the risk exposures to corporate assets are known and within acceptable levels. For most organizations this will be a major cultural change and challenge and will require IT executives to proactively step forward to make it happen. IT executives should collaborate with board members, senior executives, and outside compliance services providers to establish a program that gives executives a governance methodology that monitors and reports on the risks and provides cost/benefit analyses of alternative corrective actions. Moreover, at a minimum, corporate executives must review the governance materials quarterly, and after critical risk events occur, and take appropriate actions.

Cyber Security Targets

RFG Perspective: While the total cost of the cybersecurity breach at Target will not be known for quite a while, a reasonable estimate is that it could easily cost the company more than $500 million. The price tag includes bills associated with fines from credit card companies, other fines and lawsuits for non-compliance, services such as free credit card report monitoring for its 70-110 million impacted customers, and discounts required to keep customers coming in the door. These costs far exceed the IT costs associated with better cybersecurity prevention. Target is not alone; it is just the latest in a long line of breaches that have taken major tolls on the attacked organizations. Business and IT executives need to recognize that attackers and hackers will constantly change their multi-pronged, sophisticated attack strategies as they attempt to stay ahead of the protections installed in the enterprises. IT executives need to be constantly aware of the risk exposures and how they are changing, and continue to invest in measured, integrated cybersecurity solutions to close the gaps.

The Target cyber breach represents a new twist to the long-standing cybersecurity challenge. Unlike most other attacks that came through direct probes into the corporate network or through employee social-engineered emails, spear phishing, or multi-vectored malware aimed at IT software, the Target incident was an Operations Technology (OT) play. One reason for this may be that the vendor patch rate has improved and successes of zero-day exploits are dropping. Of course, it could also be that the misguided actors were clever enough to try a new attack vector.

IT vs OT

Most IT executives and staff give little thought to OT software, usually referred to as SCADA (supervisory control and data acquisition) software. These are industrial control systems that monitor and control things such as air conditioning, civil defense systems, heating, manufacturing lines, power generation, power usage, transmission lines, and water treatment. IT (outside of the utilities industry) tends to treat these systems and the associated software as outside of their purview. This is no longer true. Cyber attackers are constantly upping the ante and now they have begun going after OT software in addition to traditional attack vectors. IT executives and security personnel need to become actively engaged in ensuring the organization is protected against these types of threats.

Incident Attack Types

In 2013, according to the IBM X-Force Threat Intelligence Quarterly 1Q2014, the top three disclosed attack types were distributed denial of service (DDoS), SQL injection, and malware. These three vectors account for 43 percent of 8,330 vulnerability disclosures while another 46 percent of attack types remain undisclosed. (See below chart from the IBM report.) The report also points out that Java vulnerabilities continue to rise year over year, tripling in the last year alone. Fully half of the exploited application vulnerabilities were Java-based, with Adobe Reader and Internet browsers accounting for 22 and 13 percent respectively. Interestingly, mobile devices excluding laptops have yet to become a major attack point.

Currency

Another common pressure point on IT organizations is keeping current with all the security patches issued by software providers. The good news is that vendors and IT organizations are doing a better job applying patches. The overall unpatched publicly-disclosed vulnerability rate dropped from 41 percent in 2012 to 26 percent in 2013. This is great progress but much remains to be done, especially by enterprise IT. The number of patches to be applied on an ongoing basis can be overwhelming and many IT organizations cannot keep up, especially with quick fixes. Thus, zero-day exploits remain major threats that IT needs to mitigate.

Playing Defense

The challenge for IT CISOs and security staff increases every year as the number and types of actors attempting to gain access to IT systems continues to grow as do the types of attacks. Therefore, enterprises must reduce their risk exposure by using monitoring and blocking software that can rapidly detect problems almost as they occur and shut off attacks immediately before the exposure becomes too large. Additionally, staff must fine-tune access controls and patch known vulnerabilities quickly so as to (virtually) eliminate the ability for criminals to exploit holes in infrastructures. Security executives and staff should work collaboratively with others in their field and share information about attacks, defenses, meaningful metrics, and trends. IT executives should ensure security personnel are continually trained and aware of the latest trends and are implementing the appropriate defenses as rapidly as possible. As people are one of the weakest links in the security chain, IT executives should also ensure all employees are aware of company privacy and security policies and procedures and are judiciously following them.

RFG POV: IT executives and cyber security staff remain behind the curve in protecting against, discovering, and containing cyber security attacks, data exfiltration, and data breaches. There are some low-hanging initiatives IT can execute to close some of the major vulnerabilities, such as blocking troublesome IP addresses at the perimeter outside the firewall and employing enhanced software monitoring tools that can spot suspect software and alert security staff. Additionally, staff can improve password requirements, password change frequency, two-factor authentication, inclusion of OT software, and rapid deactivation of access (cyber and physical) for terminated employees. Encryption of data at rest and in transit should also be evaluated. However, IT is not the only party on the line for corporate security – the board of directors and corporate executives share the fiduciary burden for protecting company assets. IT executives should get boards and corporate executives to understand the challenges, establish the acceptable risk parameters, and play an ongoing role in security governance. IT security executives should work with appropriate parties to collect, analyze, and share incident data so that defenses and detection can be enhanced. IT executives should also recognize that cyber security is not just about technology – the weakest links are the people and processes. These gaps should be aggressively pursued and the problems regularly communicated across the organization. The investment in these corrective actions will be far less than the cost of fixing the problem once the damage is done.

Have You Been Robbed on the Last Mile of Sales?

It is a fair question, whether you are the seller or the customer. OK, so what is the last mile of sales? I didn't find an official definition, so I'm borrowing the concept from "the last mile of finance" between balance sheet and 10-K, and the "last mile of telecommunications" that is the copper wire from the common carrier's substation to your home or business. Let's call the last mile of sales

that part of the sales funnel in which prospects are ready to become customers, or are already customers, ready for up-selling and cross-selling.

Survey Participants were robbed on the Last Mile of Sales!

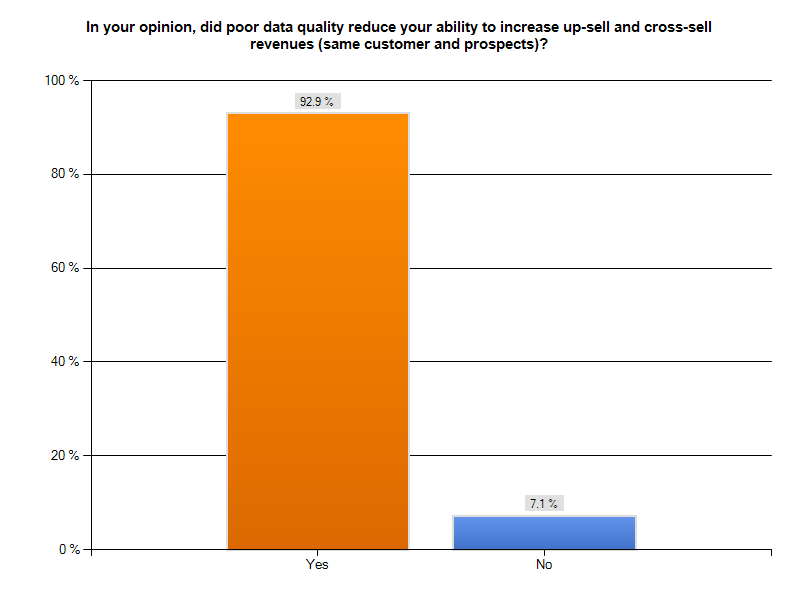

This Tuesday morning, I looked at our "Poor Data Quality - Negative Business Outcomes" survey results and I noticed a surprising agreement among participants in one sales-related area. 126 respondents, or over 90% of those responding to our question about poor data quality compromising up-selling and cross-selling, indicated they had such a problem. The graph gives you a sense of how large a percentage of respondents had lost sales opportunities.

This is a troubling statistic. Organizations spend huge sums on marketing programs designed to attract prospects and nurture them to become customers. Beyond direct monetary investment, ensuring a successful trip down the sales funnel takes time, effort, and ability. From the perspective of the seller, failing to sell more products and services to an existing (presumably happy) client is like being robbed on the last mile of sales. Your organization has already succeeded in making a first sale. Subsequent selling should be easier, not harder. From the perspective of the buyer, losing confidence in your chosen vendor because they fail to know you and your preferences, confuse you with similarly named customers, or display inept record-keeping about their last contact with you, robs you of a relationship you had invested time and money in developing. Perhaps now your go-to vendor becomes your former vendor, and you must spend time seeking an alternate source. Once confidence has been shaken, it is difficult to rebuild.

What did the survey say?

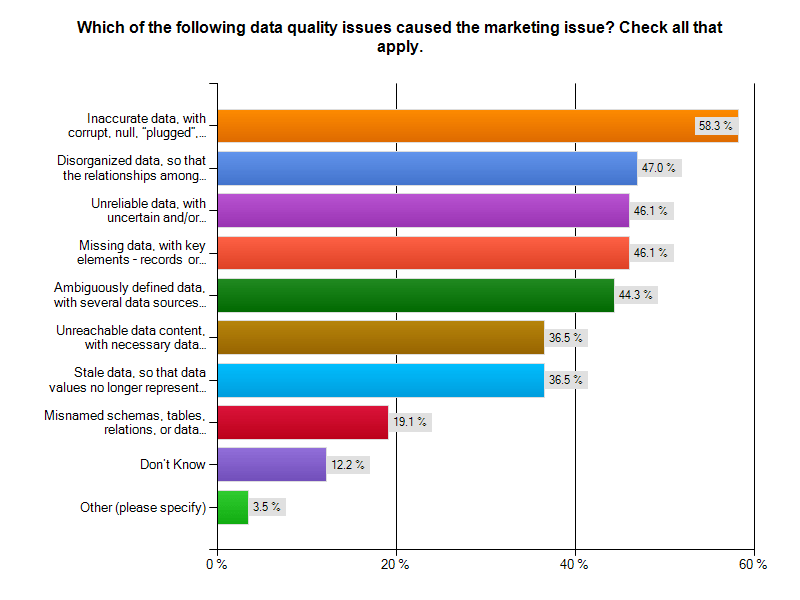

How is it possible that more than 90% of our respondents to this question lost an opportunity to up-sell or cross-sell? The next chart tells the story. It is poor data quality, plain and simple. You can read the results yourself. As a sales prospect for a lead generation service, I had a recent experience with at least one of the top four poor data quality problems.

Oops, the status wasn't updated after our last call

In the closing months of 2013, I was solicited by a lead generation firm. I asked them to contact me in the first quarter of 2014. Ten days into 2014, they called again. OK, perhaps a bit early in the quarter, but they are eager for my business. With no immediate need, I asked them to call me again in Q3-2014 to see how things were evolving. So, I was surprised when I received another call from that firm yesterday. Had we traveled through a time-warp? Was it now mid-summer? A look out the window at the snowstorm in progress suggested it was still February 2014. The caller was the same person as last time, and began an identical spiel. I interrupted and mentioned we had only spoken a week earlier. The caller appeared to remember and agree, indicating that there was no status update about the previous call. Was this sloppy ball-handling by sales, an IT issue, an ill-timed database restore? Was this a 1:1,000,000 chance or an everyday occurrence? The answer to all of those questions is "I have no idea, but I don't want to trust these folks with managing my lead generation campaign". If they can't handle their own sales process, how are they going to help me with mine? Whatever the cause of the gaffe, they robbed themselves of a prospect, and me of any confidence I might have had in them.

The Bottom Line

Being robbed on the last mile of sales by poor data quality is unnecessary, but all too common. Have you recently been robbed on the last mile of sales? Are you a seller, or a disappointed prospect or customer? Cal Braunstein of The Robert Frances Group and I would like to hear from you. Please do contact me to set up an appointment for a conversation. Whether you have already participated in our survey, are a member of the InfoGov community, or simply have an enlightening experience about how poor data quality caused you to have a negative business outcome, reach out and let us know.

Published by permission of Stuart Selip, Principal Consulting LLC

Predictions: Tech Trends – part 1 – 2014

RFG Perspective: The global economic headwinds in 2014, which constrain IT budgets, will force IT executives to question certain basic assumptions and reexamine current and target technology solutions. There are new waves of next-generation technologies emerging and maturing that challenge the status quo and deserve IT executive attention. These technologies will improve business outcomes as well as spark innovation and drive down the cost of IT services and solutions. IT executives will have to work with business executives to fund the next-generation technologies or find self-funding approaches to implementing them. IT executives will also have to provide the leadership needed for properly selecting and implementing cloud solutions, or control will be assumed by business executives who usually lack some of the skills needed for tackling outsourced IT solutions.

As mentioned in the RFG blog "IT and the Global Economy – 2014" the global economic environment may not be as strong as expected, thereby keeping IT budgets contained or shrinking. Therefore, IT executives will need to invest in next-generation technology to contain costs, minimize risks, improve resource utilization, and deliver the desired business outcomes. Below are a few key areas that RFG believes will be the major technology initiatives that will get the most attention.

Source: RFG

Analytics – In 2014, look for analytics service and solution providers to boost usability of their products to encompass the average non-technical knowledge worker by moving closer to a "Google-like" search and inquiry experience in order to broaden opportunities and increase market share.

Big Data – Big Data integration services and solutions will grab the spotlight this year as organizations continue to ratchet up the volume, variety and velocity of data while seeking increased visibility, veracity and insight from their Big Data sources.

Cloud – Infrastructure as a Service (IaaS) will continue to dominate as a cloud solution over Platform as a Service (PaaS), although the latter is expected to gain momentum and market share. Nonetheless, Software as a Service (SaaS) will remain the cloud revenue leader with Salesforce.com the dominant player. Amazon Web Services will retain its overall leadership of IaaS/PaaS providers with Google, IBM, and Microsoft Azure holding onto the next set of slots. Rackspace and Oracle have a struggle ahead to gain market share, even as OpenStack (an open cloud architecture) gains momentum.

Cloud Service Providers (CSPs) – CSPs will face stiffer competition and pricing pressures as larger players acquire or build new capabilities. New, innovative open-source-based solutions also enter the new year with momentum, as large, influential organizations look to build and share their own private and public cloud standards and APIs to lower infrastructure costs.

Consolidation – Data center consolidation will continue as users move applications and services to the cloud and to standardized internal platforms that are intended to become cloud-like. Advancements in cloud offerings along with a diminished concern for security (more of a false hope than reality) will lead more small and mid-sized businesses (SMBs) to shift processing to the cloud and operate fewer internal data center sites. Large enterprises will look to utilize clouds and colocation sites for development/test environments and handling spikes in capacity rather than open or grow in-house sites.

Containerization – Containerization (or modularization) is gaining acceptance by many leading-edge companies, like Google and Microsoft, but overall adoption is slow, as IT executives have yet to figure out how to deal with the technology. It is worth noting that the power usage effectiveness (PUE) of these solutions is excellent and has been known to be as low as 1.05 (whereas the average remains around 1.90).
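For readers less familiar with the metric, PUE is the ratio of total facility power to the power drawn by IT equipment, so a value of 1.0 would mean no overhead at all. The Python sketch below shows what the two PUE figures quoted above imply for overhead power; the 1,000 kW IT load and the function names are hypothetical and used only for illustration.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_kw(it_equipment_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power distribution, etc.) implied by a PUE value."""
    return it_equipment_kw * (pue_value - 1.0)


# Hypothetical IT load in kW, for illustration only.
IT_LOAD_KW = 1000.0

# PUE values quoted in the text above.
for label, value in (("containerized", 1.05), ("average", 1.90)):
    extra = overhead_kw(IT_LOAD_KW, value)
    print(f"{label:>13}: PUE {value:.2f} -> {extra:.0f} kW of overhead")
```

For the same IT load, the average facility in this sketch spends roughly 18 times as much power on overhead as the containerized one.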

Data center transformation – In order to achieve the levels of operational efficiency required, IT executives will have to increase their commitment to data center transformation. The productivity improvements will be achieved through the shift from standalone vertical stack management to horizontal layer management, relationship management, and use of cloud technologies. One of the biggest effects of this shift is an actual reduction in operations headcount and reorientation of skills and talents to the new processes. IT executives should expect the transformation to take a minimum of three years. However, IT operations executives should not expect clear sailing, as development shops will push back to prevent loss of control of their application environments.

3-D printing – 2014 will see the beginning of 3-D printing taking hold. Over time the use of 3-D printing will revolutionize the way companies produce materials and provide support services. Leading-edge companies will be the first to apply the technology this year and thereby gain a competitive advantage.

Energy efficiency/sustainability – While this is not news in 2014, IT executives should be making it a part of other initiatives and a procurement requirement. RFG studies find that energy savings are just the tip of the iceberg (about 10 percent) of what can be achieved when taking advantage of newer technologies. RFG studies show that in many cases the energy savings from removing hardware kept more than 40 months can pay for new, better-utilized equipment. Or, as an Intel study found, servers more than four years old accounted for four percent of the relative performance capacity yet consumed 60 percent of the power.
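The Intel figures can be turned into a rough performance-per-watt comparison. The Python sketch below is a back-of-the-envelope calculation that assumes both percentages describe the same server fleet, so the newer servers account for the remaining 96 percent of performance and 40 percent of power; the function name is illustrative.

```python
def relative_efficiency(perf_share: float, power_share: float) -> float:
    """Share of fleet performance delivered per share of fleet power consumed."""
    return perf_share / power_share


# Figures quoted in the text above (servers more than four years old).
OLD_PERF_SHARE = 0.04
OLD_POWER_SHARE = 0.60

old = relative_efficiency(OLD_PERF_SHARE, OLD_POWER_SHARE)
# Assumption: the rest of the fleet accounts for the remaining shares.
newer = relative_efficiency(1.0 - OLD_PERF_SHARE, 1.0 - OLD_POWER_SHARE)
ratio = newer / old

print(f"Older servers: {old:.3f} performance share per power share")
print(f"Newer servers: {newer:.3f} performance share per power share")
print(f"Newer servers deliver about {ratio:.0f}x the performance per unit of power")
```

Under that assumption the newer servers deliver roughly 36 times the performance per unit of power, which illustrates why retiring old hardware can help fund its replacement.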

Hyperscale computing (HPC) – RFG views hyperscale computing as the next wave of computing that will replace the low end of the traditional x86 server market. The space is still in its infancy, with the primary players being Advanced Micro Devices' (AMD's) SeaMicro solutions and Hewlett-Packard's (HP's) Moonshot server line. While penetration will be low in 2014, the value proposition for HPC solutions should become evident.

Integrated systems – Integrated systems is a poorly defined computing category that encompasses converged architecture, expert systems, and partially integrated systems as well as expert integrated systems. The major players in this space are Cisco, EMC, Dell, HP, IBM, and Oracle. While these systems have been on the market for more than a year now, revenues are still limited (depending upon whom one talks to, revenues may now exceed $1 billion globally) and adoption is moving slowly. Truly integrated systems do result in productivity, time and cost savings, and IT executives should be piloting them in 2014 to determine the role and value they can play in the corporate data centers.

Internet of things – More and more sensors are being employed and embedded in appliances and other products, which will automate and improve life in IT and in the physical world. From a data center infrastructure management (DCIM) perspective, these sensors will enable IT operations staff to better monitor and manage system capacity and utilization. 2014 will see further advancements and inroads made in this area.

Linux/open source – The trend toward Linux and open source technologies continues with both picking up market share as IT shops find the costs are lower and they no longer need to be dependent upon vendor-provided support. Linux and other open technologies are now accepted because they provide agility, choice, and interoperability. According to a recent survey, a majority of users are now running Linux in their server environments, with more than 40 percent using Linux as either their primary server operating system or as one of their top server platforms. (Microsoft still has the advantage in the x86 platform space and will for some time to come.) OpenStack and the KVM hypervisor will continue to acquire supporting vendors and solutions as players look for solutions that do not lock them into proprietary offerings with limited ways forward. A Red Hat survey of 200 U.S. enterprise decision makers found that internal development of private cloud platforms has left organizations with numerous challenges such as application management, IT management, and resource management. To address these issues, organizations are moving or planning a move to OpenStack for private cloud initiatives, respondents claimed. Additionally, a recent OpenStack user survey indicated that 62 percent of OpenStack deployments use KVM as the hypervisor of choice.

Outsourcing – IT executives will be looking for more ways to improve outsourcing transparency and cost control in 2014. Outsourcers will have to step up to the SLA challenge (mentioned in the People and Process Trends 2014 blog) as well as provide better visibility into change management, incident management, projects, and project management. Correspondingly, with better visibility there will be a shift away from fixed-price engagements to ones with fixed and variable funding pools. Additionally, IT executives will be pushing for more contract flexibility, including payment terms. Application hosting displaced application development in 2013 as the most frequently outsourced function, and 2014 will see the trend continue. The outsourcing of ecommerce operations and disaster recovery will be seen as having strong value propositions when compared to performing the work in-house. However, one cannot assume outsourcing is less expensive than handling the tasks internally.

Software defined x – Software-defined networks, storage, data centers, etc. are all the latest hype. The trouble with all new technologies of this type is that the reality will not match the initial hype. The new software-defined market is quite immature, and not all the needed functionality will be available in the early releases. Therefore, one can expect 2014 to be a year of disappointments for software-defined solutions. However, over the next three to five years the market will mature and software-defined solutions will start to become a usable reality.

Storage - Flash SSD et al – Storage is once again going through revolutionary changes. Flash, solid state drives (SSD), thin provisioning, tiering, and virtualization are advancing at a rapid pace as are the densities and power consumption curves. Tier one to tier four storage has been expanded to a number of different tier zero options – from storage inside the computer to PCIe cards to all flash solutions. 2014 will see more of the same with adoption of the newer technologies gaining speed. Most data centers are heavily loaded with hard disk drives (HDDs), a good number of which are short stroked. IT executives need to experiment with the myriad of storage choices and understand the different rationales for each. RFG expects the tighter integration of storage and servers to begin to take hold in a number of organizations as executives find the closer placement of the two will improve performance at a reasonable cost point.

RFG POV: 2014 will likely be a less daunting year for IT executives, but keeping pace with technology advances will have to be part of any IT strategy if executives hope to achieve their goals for the year and keep their companies competitive. This will require IT to understand the rate of technology change and adopt a data center transformation plan that incorporates the new technologies at the appropriate pace. Additionally, IT executives will need to invest annually in new technologies to help contain costs, minimize risks, and improve resource utilization. IT executives should consider a turnover plan that upgrades (and transforms) a third of the data center each year. IT executives should collaborate with business and financial executives so that IT budgets and plans are integrated with the business and remain so throughout the year.